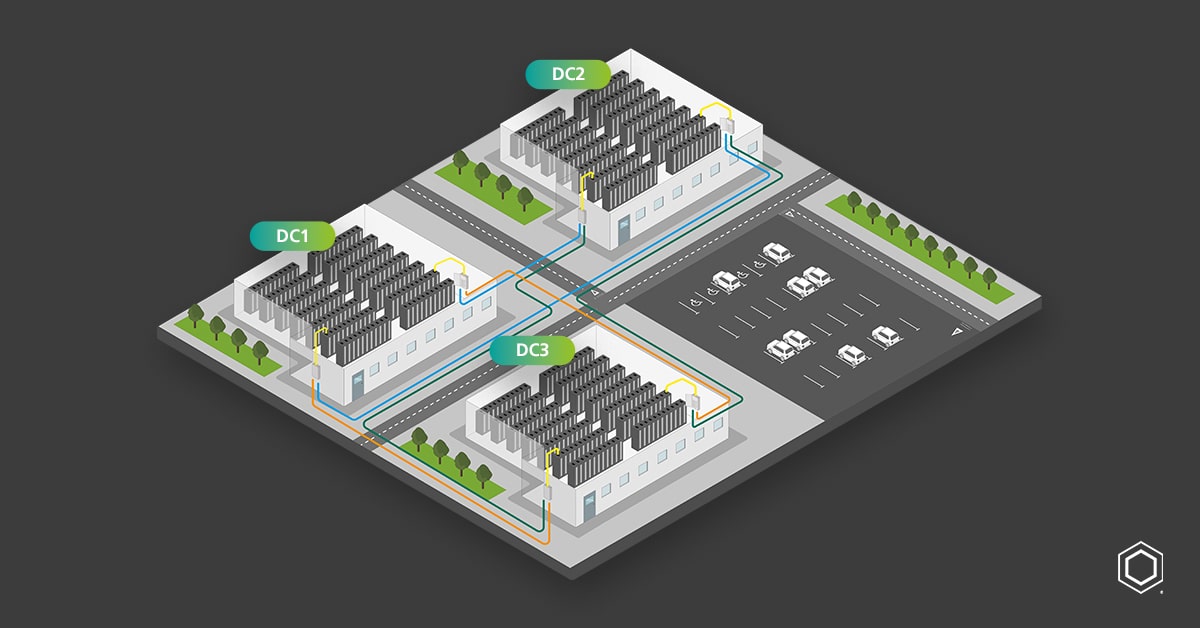

A Data Center Interconnect (DCI) is, fundamentally, a direct link between two or more data centers, designed to provide high-speed, secure, and reliable communication and data transfer between them. In a campus environment, DCI is typically deployed using high-capacity fiber to connect multiple data center buildings across a wide campus area.

These connections serve several critical purposes: they allow resources to be synchronized, enabling real-time data replication, load balancing, and workload distribution. This improves efficiency and resilience, mitigating the risk of data loss or downtime.

DCI: The Ultimate Enabler for Hyperscalers

In recent years, DCI has also become a key efficiency driver for large and hyperscale data center operators, enabling them to extend their Layer 2 (local area) networks across data centers. This lets them operate multiple data centers as a single logical data center, which brings benefits including expandability, workload mobility, resource optimization, and support for multi-tenant environments across a broader set of distributed resources.

A critical factor for large data center operators is what is referred to as the network RADIX: the reach of the network in terms of resources. If the network in a single data center building contains 300 cabinets, its RADIX is 300 cabinets; if four such buildings are joined into one logical network through DCI, the RADIX becomes 1,200 cabinets. Without a suitable networking approach, however, this larger network also means a larger fault domain, so a single failure can affect more of the estate. Advances in policy-driven, software-defined network architectures allow these challenges to be overcome and highly efficient environments to be delivered.
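Under the definition above, where RADIX counts the cabinets reachable on one logical network, the campus figure is simply the sum across the joined buildings. A minimal Python sketch of that arithmetic; the helper name `logical_radix` is hypothetical:

```python
def logical_radix(cabinets_per_building: list[int]) -> int:
    """RADIX of one logical network: total cabinets across all joined buildings."""
    return sum(cabinets_per_building)


single_building = logical_radix([300])
campus = logical_radix([300, 300, 300, 300])  # four buildings joined by DCI
print(single_building, campus)  # 300 1200
```

The same total is also the size of the shared fault domain unless the network architecture segments it, which is why the choice of networking approach matters.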

How Does It Work?

In a campus scenario, DCI uses an ultra-high-fiber-count backbone, routed through cable baskets or ducting, to interconnect the network from one building to another. These links span anywhere from as little as 100 m to 10 km. Links over the DCI backbone can run at up to 400 Gb/s, which can be broken out into lower speeds such as 100 Gb/s, 40 Gb/s, or 10 Gb/s at the server layer, keeping the network efficient and flexible.

In a spine-leaf architecture, each building can be treated as a leaf, with its internal network branching into local leaf switches. The ultra-high-speed DCI connections act as the spine, forming a high-capacity backbone that connects these buildings, or ‘leaves’, and enables seamless workload mobility across the campus.
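The leaf/spine relationship can be sketched as a small graph model in Python. This assumes each building acts as one leaf and the DCI spine layer is represented by two spine nodes; the node names and helper functions are hypothetical and only illustrate the topology, not any vendor's configuration.

```python
from itertools import combinations


def build_spine_leaf(leaves: list[str], spines: list[str]) -> set[tuple[str, str]]:
    """Every leaf links to every spine; leaves never link to each other directly."""
    return {(leaf, spine) for leaf in leaves for spine in spines}


def leaf_to_leaf_paths(links: set[tuple[str, str]], a: str, b: str) -> set[str]:
    """Spines through which leaf a reaches leaf b in two hops (a -> spine -> b)."""
    spines_a = {spine for (leaf, spine) in links if leaf == a}
    spines_b = {spine for (leaf, spine) in links if leaf == b}
    return spines_a & spines_b


buildings = ["bldg-A", "bldg-B", "bldg-C", "bldg-D"]  # hypothetical leaves
spines = ["spine-1", "spine-2"]                       # hypothetical spine nodes
links = build_spine_leaf(buildings, spines)
print(len(links), "leaf-spine links")

for a, b in combinations(buildings, 2):
    print(a, "<->", b, "via", sorted(leaf_to_leaf_paths(links, a, b)))
```

Every pair of buildings reaches the other through either spine in exactly two hops, which is what gives the design its consistent hop count and path redundancy.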

Because high-speed DCI links can be broken out into various lower speeds, they give the spine-leaf network a higher effective RADIX: the network can accommodate more connections, improving scalability and performance. Combined with the spine-leaf architecture and the high-speed fiber backbone, this makes campus DCI a robust, flexible, and efficient networking solution.
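The connection between breakout and RADIX is essentially division: each high-speed port carries enough bandwidth for several lower-speed connections. A minimal Python sketch, assuming an idealized bandwidth split and a hypothetical 32-port 400 Gb/s switch. Real switches and optics support only specific breakout modes, so treat these figures as upper bounds rather than supported configurations.

```python
def breakout_lanes(port_gbps: int, lane_gbps: int) -> int:
    """Idealized number of lower-speed links one port's bandwidth divides into."""
    if lane_gbps <= 0 or port_gbps % lane_gbps != 0:
        raise ValueError("lane speed must be positive and divide the port speed evenly")
    return port_gbps // lane_gbps


def effective_radix(ports: int, port_gbps: int, lane_gbps: int) -> int:
    """Number of lower-speed connections a switch could present after breakout."""
    return ports * breakout_lanes(port_gbps, lane_gbps)


PORTS = 32  # hypothetical switch port count
for lane in (100, 40, 10):
    print(f"{lane} Gb/s: {breakout_lanes(400, lane)} per port, "
          f"{effective_radix(PORTS, 400, lane)} per switch")
```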

The Practical Challenges

Implementing Data Center Interconnects (DCIs) on a campus can be complex and resource-intensive, and it brings several challenges. These range from choosing the most appropriate installation method and organizing labor and machinery to the regulatory aspects of digging up tarmac where required.

With this in mind, it’s critical to future-proof DCI deployments to minimize the need for repeat work over time.

Several installation types can be used, such as ducted or aerial installation. Each carries its own difficulties, such as the risk of damaging fiber during pulling, or the need for specialized blowing and jetting equipment for duct installations.

A critical consideration is maximizing the fiber count within existing ducts to future-proof the network, which means balancing maximum capacity against overcrowding that might damage fibers or hinder future expansion. High-density fiber cables can solve this problem. AFL Hyperscale has worked to reduce cable diameter as much as possible to support this need, while ensuring that cable jackets remain as damage-resistant as possible.
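One common way to reason about this trade-off is the duct fill ratio: total cable cross-sectional area divided by the duct's inner cross-sectional area. The Python sketch below assumes round cables and a purely area-based limit; the 40 mm duct, the cable diameters, and the 0.5 maximum fill are hypothetical values, and real limits should come from duct and cable manufacturer guidance.

```python
import math


def circle_area(diameter_mm: float) -> float:
    """Cross-sectional area of a circle with the given diameter, in mm^2."""
    return math.pi * (diameter_mm / 2) ** 2


def fill_ratio(duct_inner_mm: float, cable_outer_mm: list[float]) -> float:
    """Fraction of the duct's inner cross-section occupied by the cables."""
    return sum(circle_area(d) for d in cable_outer_mm) / circle_area(duct_inner_mm)


def max_cables(duct_inner_mm: float, cable_outer_mm: float, max_fill: float) -> int:
    """Most identical cables that keep the area fill at or below max_fill."""
    # Area scales with diameter squared, so n * (d / D) ** 2 <= max_fill.
    return math.floor(max_fill * (duct_inner_mm / cable_outer_mm) ** 2)


DUCT_INNER = 40.0  # hypothetical duct inner diameter, mm
MAX_FILL = 0.5     # hypothetical fill limit
for outer in (20.0, 14.0):  # hypothetical cable outer diameters, mm
    n = max_cables(DUCT_INNER, outer, MAX_FILL)
    print(f"{outer} mm cable: {n} per duct, fill {fill_ratio(DUCT_INNER, [outer] * n):.2f}")
```

Because area grows with the square of diameter, even a modest reduction in cable diameter can noticeably increase how many cables, and therefore fibers, fit in the same duct.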

While deploying DCI can seem daunting, for large data center operators and hyperscalers the payoff is well worth the effort. Realizing the flexibility and resilience of a data center at campus scale, rather than within a single building, offers efficiencies and significant savings over the life of a data center.

Partnering with organizations such as AFL Hyperscale, which are both rich in experience and pioneering in their approach to innovation in this space, can help avoid costly mistakes and ensure highly optimized, future-proof deployments that serve as assets for years to come rather than becoming liabilities within a three-year life span.