HYPER SCALE

Hyper – Prefix, excessive, greater than usual

Scale – Verb, to change in size or number

Composed of the words hyper (extreme, excessive, or greater than usual) and scale (to change size), the term hyperscale doesn’t have an official dictionary definition – yet! Within a computing context, the term refers to the ability of a large network of computers (tens of thousands or more) to automatically respond to changing demand and assign jobs to processing, storage, and network resources as needed.

To put this into context, when the season 8 premiere of the popular HBO series Game of Thrones aired, a staggering 17.4 million people tuned in to watch, 5.6 million of whom were streaming online. That meant 5.6 million devices simultaneously relied on a hyperscale network to deliver one hour of TV.¹ These numbers were record-breaking for HBO and for video streaming in general, and they all depended on a hyperscale computing infrastructure that could grow and shrink as people tuned in or out at different times.

Put simply, hyperscale is the ability of a computing system to adapt in scale to the demands of a workload. It is this type of computing power and network scale that drives video streaming, social media, cloud computing, software platforms, and big-data storage. These workloads are only set to grow as the world we live in becomes more connected and more people and things use cloud services.
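To make "adapting in scale to demand" concrete, here is a minimal Python sketch of a target-tracking scaling rule. It does not describe how any particular hyperscaler works; the `ScalingPolicy` class, its parameters (`capacity_per_server`, `target_utilization`, the server floor and ceiling) and the `desired_servers` function are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # All fields are hypothetical values for illustration.
    capacity_per_server: float  # requests/second one server can sustain
    target_utilization: float   # e.g. 0.7 keeps 30% headroom for spikes
    min_servers: int            # floor so the service never scales to zero
    max_servers: int            # ceiling set by budget or physical fleet size


def desired_servers(demand_rps: float, policy: ScalingPolicy) -> int:
    """Return how many servers the fleet should run for the current demand."""
    usable_per_server = policy.capacity_per_server * policy.target_utilization
    needed = math.ceil(demand_rps / usable_per_server)
    # Clamp the raw estimate to the policy's floor and ceiling.
    return max(policy.min_servers, min(policy.max_servers, needed))


policy = ScalingPolicy(capacity_per_server=1000, target_utilization=0.7,
                       min_servers=2, max_servers=10_000)

# An autoscaler would re-evaluate this periodically as viewers tune in or out.
for demand in (500, 70_500, 50_000_000):
    print(f"{demand:>12,} req/s -> {desired_servers(demand, policy):,} servers")
```

With this example policy, 70,500 requests per second maps to 101 servers, very low demand falls back to the two-server floor, and extreme demand is capped at the 10,000-server ceiling. Running below 100% utilization trades some idle capacity for time to bring new servers online before existing ones saturate.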

What are the key characteristics of a Hyperscale Data Center?

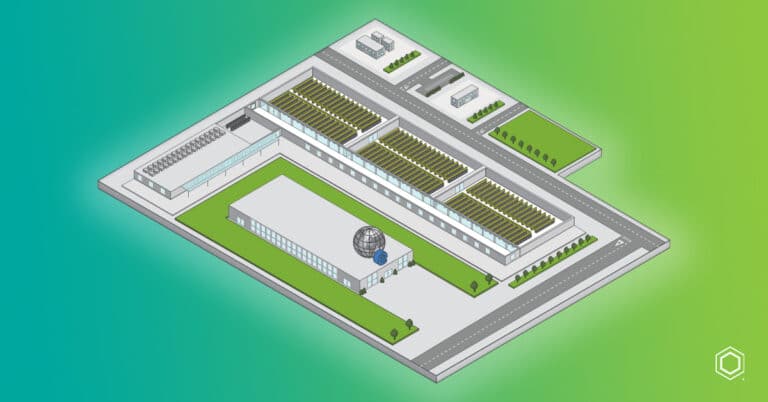

Hyperscale has shifted IT networks from on-premises computer rooms to huge fleets of data centers. Three key characteristics help to define hyperscale: the physical structure, how incoming traffic is processed, and how software is used to automate different functions.

Physical Structure

The hyperscale function does not reside in one data center but in a unified network of data centers and other elements. A hyperscale network can be thought of as a host of interconnected computers, and it has evolved into fleets of data centers organized into highly connected, low-latency clusters. Some of these data centers sit next door to one another and others hundreds of miles apart, and clusters may operate at a multi-regional or even a global level.

Hyperscale data centers are built as an interconnected fleet, rather than as one huge data center, to spread risk. These large networks are housed across multiple sites to protect against factors such as natural disasters, power outages, or security threats. Locating data centers in different regions and countries also puts capabilities close to customers and helps operators meet local data security practices and regulations.

Incoming Traffic

The way a hyperscale provider receives incoming traffic into its network of data centers is as important as how it processes requests within the data center.

Hyperscale operators manage extended networks that interconnect their customers and data centers. Traffic is received at network edge sites, many of which are co-located with Internet service providers, co-location data centers, and Internet exchanges. Traffic is routed by the hyperscale operator to a selected global cluster of data centers, often called a region. Within a region, there may be multiple discrete data centers, often grouped in availability zones.
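The edge-to-region-to-zone path described above can be sketched as a two-step selection. This is a minimal Python sketch under simplifying assumptions: the `Region` and `AvailabilityZone` classes, the latency and capacity figures, and the rule "pick the lowest-latency region that still has spare capacity, then its zone with the most spare capacity" are all hypothetical; real operators weigh many more signals.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AvailabilityZone:
    name: str
    spare_capacity: int  # hypothetical count of free request slots


@dataclass
class Region:
    name: str
    latency_ms: float  # measured from the edge site that received the traffic
    zones: list[AvailabilityZone] = field(default_factory=list)


def route(regions: list[Region]) -> tuple[str, str]:
    """Pick a (region, zone) pair for traffic arriving at one edge site."""
    # Only regions with at least one zone that has room are eligible.
    candidates = [r for r in regions if any(z.spare_capacity > 0 for z in r.zones)]
    if not candidates:
        raise RuntimeError("no region has spare capacity")
    region = min(candidates, key=lambda r: r.latency_ms)  # closest eligible region
    zone = max(region.zones, key=lambda z: z.spare_capacity)  # least-loaded zone
    return region.name, zone.name


# Hypothetical view from a European edge site.
regions = [
    Region("eu-west", 12.0, [AvailabilityZone("eu-west-a", 40),
                             AvailabilityZone("eu-west-b", 0)]),
    Region("us-east", 80.0, [AvailabilityZone("us-east-a", 500)]),
]
print(route(regions))
```

If every zone in the nearby region fills up, the same rule falls back to the more distant region, which is one reason spreading data centers across regions helps absorb demand spikes.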

Hyperscale operators may own their network connections, lease cables, fibers, or wavelengths from a communications service provider, or use tunneling protocols to create virtual connections over the public Internet.

Software Automation

Hyperscale computing networks, made up of millions of servers, are managed by an overlaid software environment that is ready to receive requests and send them to the appropriate resource; this is known as software automation.

Software automation encompasses provisioning and orchestration. Provisioning is setting up a server for use in the network, and may also refer to installing the operating system and other system software. Orchestration is how the network monitors and manages its workload and server capacity, determining whether any requests need to be rolled over to other servers to accommodate an increase in traffic.
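The roll-over decision in orchestration can be illustrated with a toy placement loop. In this hypothetical Python sketch, each incoming request goes to the least-loaded server; once every server has reached a fixed `capacity`, the remaining requests are reported as unplaced, which is the point where an orchestrator would ask provisioning for more servers. The `assign` function, the per-server request counts, and the fixed-capacity model are assumptions for illustration only.

```python
from __future__ import annotations


def assign(requests: int, loads: dict[str, int], capacity: int) -> tuple[dict[str, int], int]:
    """Place `requests` one at a time on the least-loaded server.

    Returns the updated per-server loads and the number of requests that
    could not be placed because every server was already at `capacity`.
    """
    loads = dict(loads)  # work on a copy so the caller's snapshot is untouched
    if not loads:
        return loads, requests  # no servers provisioned yet: nothing can be placed
    for placed in range(requests):
        server = min(loads, key=loads.get)  # least-loaded server
        if loads[server] >= capacity:
            # Even the least-loaded server is full, so all are: report overflow.
            return loads, requests - placed
        loads[server] += 1  # roll this request onto the chosen server
    return loads, 0


# Hypothetical fleet snapshot: s1 is busy, s2 has room.
new_loads, unplaced = assign(6, {"s1": 8, "s2": 3}, capacity=10)
print(new_loads, unplaced)
```

A nonzero `unplaced` count is the signal that ties the two halves of software automation together: orchestration detects the shortfall, and provisioning adds servers to absorb it.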

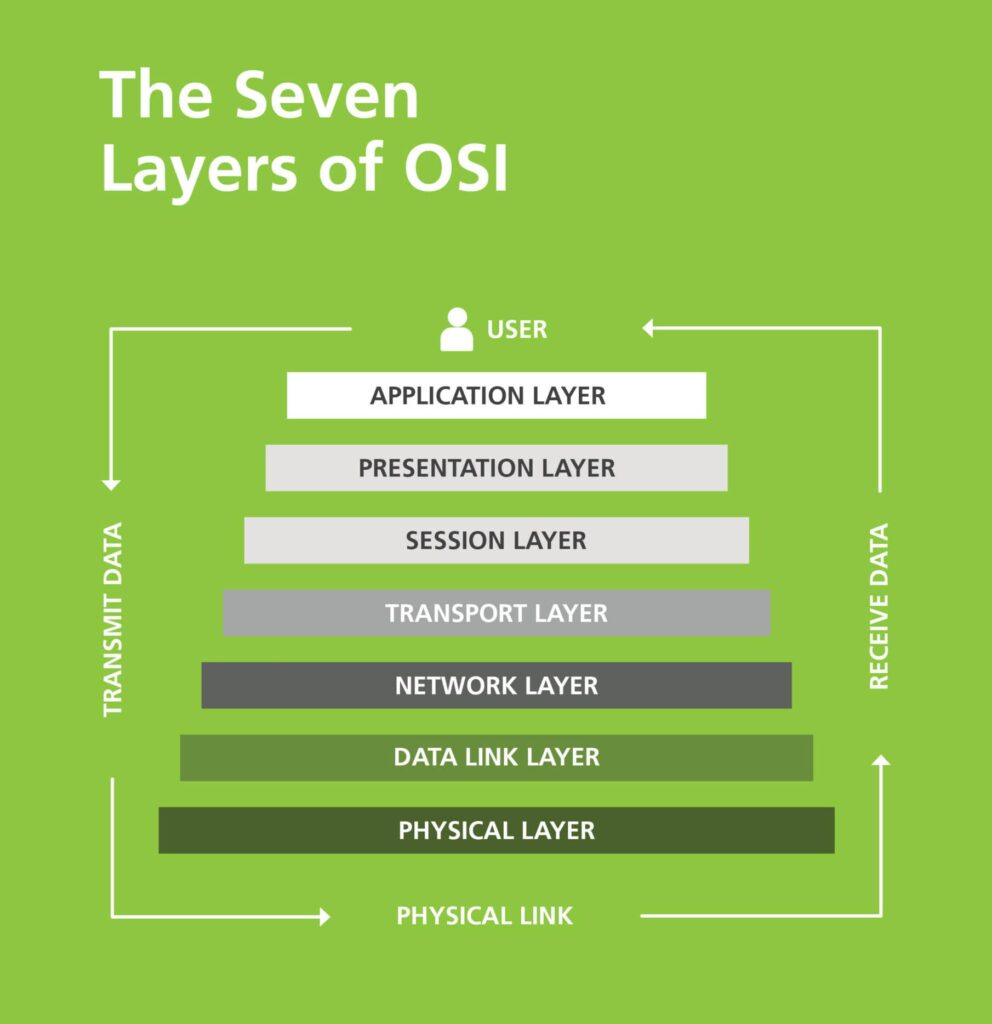

Interconnectivity has emerged as one of the most important considerations in network planning for hyperscale computing operators, or hyperscalers. In the seven-layer OSI (Open Systems Interconnection) model of computer networking, bit transmission through various types of media sits at Layer 1. The passive network that underpins the seven-layer OSI model, its cabling and connectivity, is referred to as Layer 0, or the PHY in the TCP/IP model. Layer 0 is of paramount importance for hyperscale (or indeed any Internet protocol) to operate, as it is the key to ensuring consistent, reliable, fast communication between network devices.