Hyperscale has reshaped business, commerce, and society through the delivery and availability of IT services. Its future can be divided into two categories: technological and commercial. In this article, we examine what the future holds for hyperscalers from a technological standpoint.

Hyperscalers need to keep an eye on the pace of innovation and the growing deployment of new technologies. With the emergence of artificial intelligence, 5G, the Internet of Things (IoT), augmented reality, and autonomous vehicles, the demand for data is set to grow steadily and then accelerate sharply.

All these applications will drive the need for more data, delivered faster, while still ensuring real-world suitability, whether that means personal safety in the case of autonomous vehicles or user experience in the case of augmented reality.

This growth in data consumption is evident if we simply examine the growth that has occurred in the past year. A lot can happen on the Internet in a minute.

Connectivity

The ability for hyperscalers to be globally available and offer low-latency connections in any country is of critical importance.

Hyperscale data center fleets are already racing to grow their footprints in Europe, Asia-Pacific, and North America, with the focus now turning to establishing stronger footprints in South America, the Middle East, and Africa.

This brings with it the need for higher levels of fiber connectivity and a wider global distribution of subsea cables, cable landing stations, exchange nodes, and emerging edge data centers. All of this translates to higher fiber counts running into and out of the data center.

Speed

The exact roadmap of speeds in hyperscale data centers is uncertain, but the evolution has been steady: from the early days of 100Mb/s and 1Gb/s to speeds of 10Gb/s, 40Gb/s, 100Gb/s, and the more recent migration to 200Gb/s and subsequently 400Gb/s. Given this trajectory, it is very likely that network speeds will advance to 800Gb/s and 1.6Tb/s in the future.

100Gb/s is a common speed within hyperscale data centers today and can be achieved via 4 x 25Gb/s channels, whether fiber pairs or wavelengths, using QSFP (Quad Small Form-factor Pluggable) optical transceivers. The heartbeat of the data center is running at 25Gb/s, including on intra-data center links.

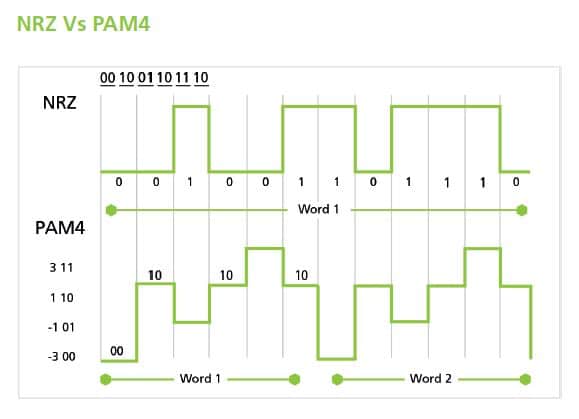

For 400Gb/s, multi-source agreements set out numerous ways to reach that rate: 4 x 100Gb/s, 8 x 50Gb/s, 16 x 25Gb/s, and so on. However, another method of achieving these speeds is emerging: PAM4. Moving away from the traditional Non-Return-to-Zero (NRZ) transmission mechanism, Pulse Amplitude Modulation (PAM) allows more bits to be transmitted in the same amount of time on a serial channel.
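As a quick check on the lane combinations listed above, the aggregate rate is simply the number of channels multiplied by the rate per channel. The short Python sketch below works through that arithmetic; the names `LANE_CONFIGS` and `aggregate_rate_gbps` are hypothetical and chosen only for illustration.

```python
# Lane configurations named in the text: (number of lanes, Gb/s per lane).
LANE_CONFIGS = [(4, 100), (8, 50), (16, 25)]


def aggregate_rate_gbps(lanes: int, per_lane_gbps: int) -> int:
    """Return the total link rate for a set of parallel lanes."""
    return lanes * per_lane_gbps


for lanes, per_lane in LANE_CONFIGS:
    total = aggregate_rate_gbps(lanes, per_lane)
    print(f"{lanes} x {per_lane}Gb/s = {total}Gb/s")
```

Every configuration reaches the same 400Gb/s total; what differs is how many fiber pairs or wavelengths are needed and how fast each one has to run.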

Packing more data into the same timeframe is achieved by using more than two signaling levels, an approach known as multi-level signaling (MLS) or pulse amplitude modulation (PAM). NRZ itself is a two-level scheme (PAM-2), whereas PAM4 uses four distinct levels, each encoding two bits of data, essentially doubling the data rate of a connection at the same symbol rate.
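To make the difference concrete, the following Python sketch encodes the same bit sequence with a two-level (NRZ) scheme and a four-level (PAM4) scheme. It is a simplified illustration, not a model of a real transceiver: the level numbering and the Gray-coded bit-pair mapping are assumptions chosen for the example, and the function names are hypothetical.

```python
# Illustrative level assignments (assumptions for this sketch, not from the article).
NRZ_LEVELS = {0: 0, 1: 1}
# Gray-coded mapping: neighbouring levels differ by only one bit.
PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def encode_nrz(bits):
    """One symbol per bit, two possible levels."""
    return [NRZ_LEVELS[b] for b in bits]


def encode_pam4(bits):
    """One symbol per pair of bits, four possible levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [1, 0, 0, 1, 1, 1, 0, 0]
nrz = encode_nrz(bits)
pam4 = encode_pam4(bits)
print(f"NRZ : {len(nrz)} symbols {nrz}")
print(f"PAM4: {len(pam4)} symbols {pam4}")
```

The same eight bits need eight NRZ symbols but only four PAM4 symbols, so at an unchanged symbol rate the channel carries twice as many bits per second. The trade-off is that the four levels sit closer together, which makes the signal more sensitive to noise.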

As with many aspects of network connectivity, there are multiple ways of finding a solution. Who does it best and at the lowest cost remains to be seen, and we don’t know exactly which configurations will be at the forefront. What we do know is that the need for more information to be transmitted per second will only continue to grow.

Quantum Computing

Quantum computing is the area of study focused on developing computer technology based on the principles of quantum theory, which explains the nature and behavior of energy and matter on the quantum (atomic and subatomic) level. While current computers manipulate individual bits, which store information as binary 0 and 1 states, quantum computers leverage quantum mechanical phenomena to manipulate information. To do this, they rely on quantum bits or qubits.

In quantum computing, qubits can exist in multiple states simultaneously, so they can interact with one another on a much richer level, and a simple interaction can yield many more possible states than a binary one. This means that complex problems can be solved and analysis can be done on a much larger scale than previously imagined. Quantum computers could mean breakthroughs in the fields of science, medicine, finance, and engineering, to name but a few.
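A rough way to see what "existing in different states simultaneously" means is to simulate a single qubit on a classical computer. The Python sketch below is a textbook-style illustration under simplifying assumptions: it represents a qubit as a pair of real amplitudes and applies a Hadamard gate, a standard operation that is not mentioned in this article, to put the qubit into an equal superposition. The function names are hypothetical.

```python
import math


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(state):
    """Probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    return [abs(amp) ** 2 for amp in state]


def amplitudes_needed(n_qubits):
    """Number of amplitudes a classical simulation must track for n qubits."""
    return 2 ** n_qubits


zero = (1.0, 0.0)            # a qubit that is definitely 0
superposed = hadamard(zero)  # now an equal mix of 0 and 1
print(measurement_probabilities(superposed))
print(amplitudes_needed(10))
```

After the gate, the qubit has equal probability of being measured as 0 or 1. The last function hints at why classical simulation becomes impractical: every additional qubit doubles the number of amplitudes that have to be tracked.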

As hardware has improved over the years, people have used more feature-rich applications. Personal computers went from being driven by command lines to GUIs, and the World Wide Web went from rudimentary web browsers to the Internet as we know it today. Over the years, applications and hardware have grown together, supported by network connectivity, and this will continue as we move on to quantum computing and beyond.

Quantum computing and the data involved have the potential to transform the hyperscale landscape.

Google has already claimed to have achieved “quantum supremacy” for the first time, reporting that its experimental Sycamore quantum processor completed in just 200 seconds a calculation that would take a traditional supercomputer 10,000 years.

As data center revenue grows and the demand for data increases, the expansion of the hyperscale data center infrastructure will continue. With that, commercial drivers, as always, will focus largely on speed and efficiency. Watch this space for the next article in the series where we take a look at the commercial factors that will determine the future of hyperscale.

Want to learn more? Read the full What is Hyperscale? e-book! Learn about the evolution of hyperscale data centers, the challenges hyperscale networks face, and what the future looks like as demand for data and connectivity continues to grow.